Highlights

- We on the Google Photos team saw an opportunity to reconnect people with their memories, and we used AI to understand what photos are meaningful and worthy of reminiscing. That said, because a large, diverse group of people use Photos, and because reminiscing is so personal, we knew that we’d need to give individuals control over their experience. (View Highlight)

- In Google Photos we use AI to make your images easier to search and organize by people, places, and things. It also powers features that go beyond search and organization. We use AI to automatically combine photos and videos into a short movie set to music and generate animations from photo bursts. If face grouping is on, AI creates collages featuring a recent photo of someone next to an older photo of them in a similar pose. Personally, I love that these show how someone has grown over time, like this one that shows my daughter at age one and age three. (View Highlight)

- Rediscover This Day resurfaced photos from the same day in previous years, and They Grow Up So Fast compiled photos and videos of a loved one over time into a short movie. These quickly became some of our most loved features; people enjoyed seeing these meaningful moments they might not otherwise revisit. (View Highlight)

- We started working on our Memories feature with the goal to make reminiscing a central and everyday part of the Google Photos experience. With the help of AI, we set out to curate meaningful content from your photo library and display it in an immersive story player. (View Highlight)

- That said, Memories needed to be enjoyable for everyone, no matter the size of their photo library, whether or not they travel or have kids or pets, and whether they take hundreds of pictures a week or a few pictures a month. To create an engaging reminiscing experience that everyone would enjoy, we needed to make tough decisions about what types of content to include or filter out. (View Highlight)

- In addition, reminiscing is personal and not all memories are welcome. Some memories are intensely sad, upsetting, or painful, such as photos of an ex-partner or a loved one who has died. When we expanded our reminiscing features and automatically brought more photos and videos out of obscurity and put them front and center in the Photos app, the impact of getting things wrong became much higher. (View Highlight)

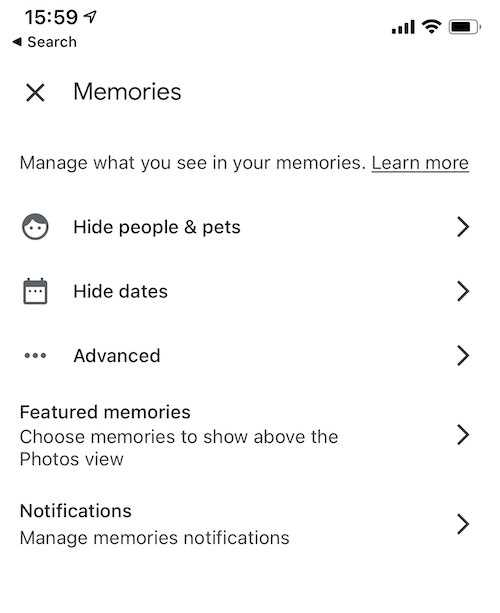

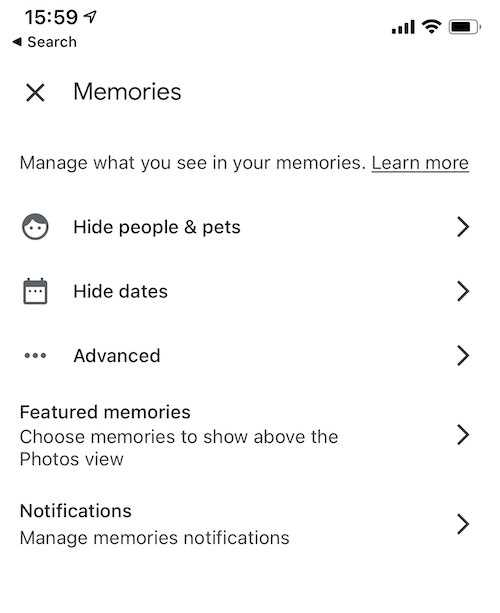

- We knew that we needed to give each individual some control over their experience. AI-driven products are probabilistic by nature, and the experience won’t be perfect for everyone, every time. It’s important to allow people to adapt the output to their needs, edit the experience, or even turn it off. (View Highlight)

- To build Memories, we didn’t just start with AI — we started with people. We conducted research with a diverse set of users and those learnings guided how we defined the AI models that power Memories. (View Highlight)

- To start, AI curation for Memories takes a set of photos and filters out the bad, boring, and sensitive stuff — from receipts and parking lots to all the blurry photos you took of your fast-moving toddler before you snapped a sharp one. We do this in two ways: non-pixel-based detection models produce signals and labels (i.e. things, people and pets) that determine how likely it is that a photo could be a receipt or picture of your tax forms that shouldn’t be included; and pixel-based models filter out near-duplicates, and score photos on a set of aesthetic qualities like blurriness and lighting. (View Highlight)

- Then a set of non-machine-learning filters based on photo metadata (image resolution, file formats, photo dimensions) filters out things like screenshots and low-resolution photos. We use rule-based filters because AI models simply aren’t needed for this. (View Highlight)
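As a rough illustration of the curation steps in the two highlights above, here is a minimal sketch in Python. It is not Google Photos code: the `Photo` fields, label names, thresholds, and the screenshot heuristic are all hypothetical, and the model outputs (label scores, blur score, near-duplicate group) are assumed to have been computed elsewhere.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Photo:
    photo_id: str
    width: int
    height: int
    file_format: str                                # e.g. "jpeg", "png"
    labels: dict = field(default_factory=dict)      # hypothetical label -> model score
    blur: float = 0.0                               # hypothetical: 0 = sharp, 1 = very blurry
    duplicate_group: Optional[str] = None           # hypothetical near-duplicate cluster id


# Every constant below is an illustrative assumption, not a real product setting.
EXCLUDED_LABELS = {"receipt", "tax_form"}
LABEL_THRESHOLD = 0.5
MAX_BLUR = 0.6
MIN_PIXELS = 640 * 480
SCREEN_SIZES = {(1080, 1920), (1170, 2532)}


def looks_excluded(photo: Photo) -> bool:
    """Model-signal filter: drop photos likely to be receipts, forms, and so on."""
    return any(photo.labels.get(name, 0.0) >= LABEL_THRESHOLD for name in EXCLUDED_LABELS)


def keep_sharpest_per_group(photos: list) -> list:
    """Near-duplicate filter: keep only the least blurry photo in each group."""
    best = {}
    for p in photos:
        if p.duplicate_group is None:
            continue
        current = best.get(p.duplicate_group)
        if current is None or p.blur < current.blur:
            best[p.duplicate_group] = p
    keep = {p.photo_id for p in best.values()}
    return [p for p in photos if p.duplicate_group is None or p.photo_id in keep]


def passes_metadata_rules(photo: Photo) -> bool:
    """Rule-based filter on metadata only: no model involved."""
    if photo.width * photo.height < MIN_PIXELS:
        return False
    is_screenshot = (
        photo.file_format == "png" and (photo.width, photo.height) in SCREEN_SIZES
    )
    return not is_screenshot


def curate(photos: list) -> list:
    """Apply the filters in the order the highlights describe them."""
    candidates = [p for p in photos if not looks_excluded(p) and p.blur <= MAX_BLUR]
    candidates = keep_sharpest_per_group(candidates)
    return [p for p in candidates if passes_metadata_rules(p)]
```

Keeping the metadata rules as plain functions mirrors the point made in the highlight: checks such as resolution or file format need no model, so they stay cheap and easy to reason about.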

- Even if we could accurately predict the significance of each photo, we can’t accurately predict how someone will feel about revisiting a particular moment. And we’d heard from some people that photos surfaced from our earlier Rediscover This Day feature were sometimes unwelcome. In Rediscover This Day, users could turn off the feature or swipe the card away. (View Highlight)

- With Memories prominently placed at the top of the main app view, we had to be especially sensitive to needs like this. While our AI models do their best to filter out sensitive content, they won’t — and can’t — always get it right. (View Highlight)

- But many photos of funerals and weddings in Western countries share the same objective characteristics: people wearing dark suits, people seated in an assembly or around tables. Funeral traditions are also not universal, and don’t always fit the stereotypical Western image. (View Highlight)

- To give people control in Memories, we used existing controls and added new ones. Shortly after we launched Rediscover This Day, Google Photos gave people the ability to hide specific faces in their library. You can hide photos of an ex-partner or an obnoxious in-law, and their face won’t show up in reminiscing features ever again. (View Highlight)

- We felt strongly that the ability to hide dates was also important, and research supported this hypothesis. So we built controls to hide dates and date ranges directly from the memory player. You can also remove memories altogether. The result is key controls for people to adjust and personalize their reminiscing. (View Highlight)
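The controls described in these highlights (hiding faces, hiding dates and date ranges, and turning the experience off) could be modeled along the following lines. This is only a sketch under stated assumptions: `MemoryPhoto`, `ReminiscingControls`, and their fields are hypothetical names chosen for illustration and do not reflect how Google Photos stores or applies these settings.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class MemoryPhoto:
    photo_id: str
    taken_on: date
    face_ids: frozenset = frozenset()   # hypothetical ids from face grouping


@dataclass
class ReminiscingControls:
    memories_enabled: bool = True                        # "or even turn it off"
    hidden_faces: set = field(default_factory=set)       # faces the user chose to hide
    hidden_dates: set = field(default_factory=set)       # individual hidden dates
    hidden_ranges: list = field(default_factory=list)    # (start, end) pairs, inclusive

    def allows(self, photo: MemoryPhoto) -> bool:
        """Return True only if no user control excludes this photo."""
        if not self.memories_enabled:
            return False
        if self.hidden_faces & photo.face_ids:
            return False
        if photo.taken_on in self.hidden_dates:
            return False
        return not any(start <= photo.taken_on <= end for start, end in self.hidden_ranges)
```

In this sketch the user's choices are applied as hard rules after any model-based curation, so a hidden face or date is excluded regardless of how the models scored the photo.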
