Highlights

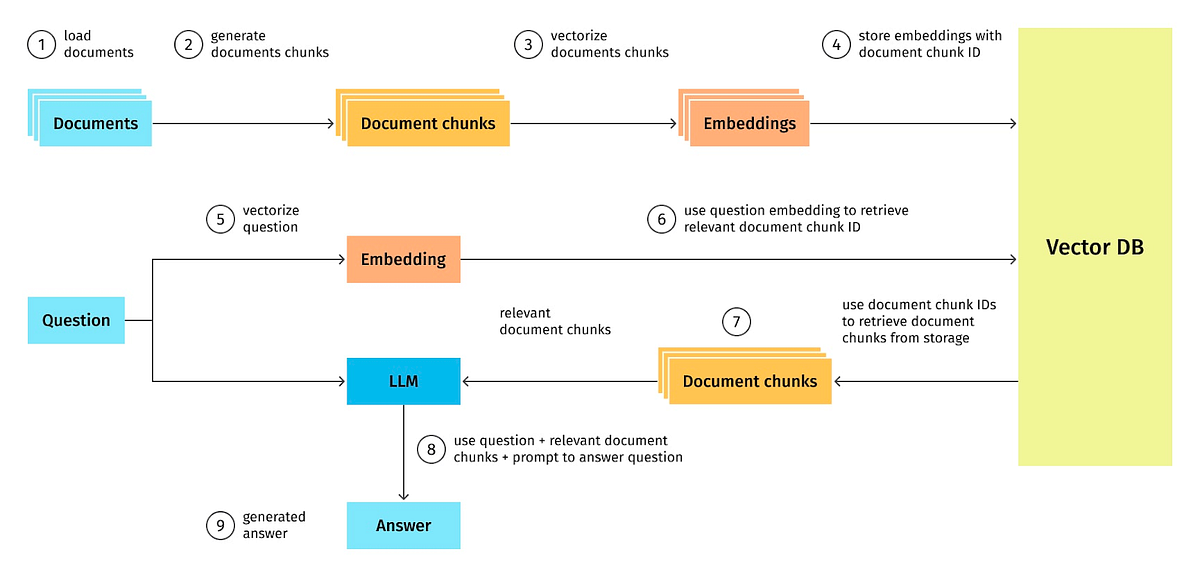

- In this article, we discuss various technical considerations when implementing RAG, exploring the concepts of chunking, query augmentation, hierarchies, multi-hop reasoning, and knowledge graphs. We also discuss unsolved problems & opportunities in the RAG infrastructure space, and introduce some infrastructure solutions for building RAG pipelines. (View Highlight)

- When discussing effective information retrieval in RAG, it is crucial to understand the difference between “relevance” and “similarity.” Similarity is about how closely the words match, whereas relevance is about the connectedness of ideas. You can identify semantically close content using a vector database query, but identifying and retrieving relevant content requires more sophisticated tooling. (View Highlight)

- This is an important concept to keep in mind as we explore various RAG techniques below. If you haven’t yet, check out LlamaIndex’s helpful video on building production RAG apps. It is a good primer for our discussion of RAG system development techniques. (View Highlight)

- How can you ensure you’re selecting the right chunk? The effectiveness of your chunking strategy largely depends on the quality and structure of these chunks. (View Highlight)

- Determining the optimal chunk size is about striking a balance — capturing all essential information without sacrificing speed. (View Highlight)

- While larger chunks can capture more context, they introduce more noise and require more time and compute to process. Smaller chunks have less noise, but may not fully capture the necessary context. Overlapping adjacent chunks is one way to balance these two constraints. With overlapping chunks, a query will likely retrieve enough relevant data across multiple vectors to generate a properly contextualized response. (View Highlight)
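
As a rough illustration of overlapping chunks, here is a minimal sketch that splits a list of words into fixed-size windows sharing a few words with their neighbours. The function name `chunk_with_overlap` and its parameters are assumptions made for this example, and splitting on whitespace stands in for whatever tokenizer a real pipeline would use.

```python
def chunk_with_overlap(words: list[str], chunk_size: int, overlap: int) -> list[list[str]]:
    """Split `words` into windows of `chunk_size` that share `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        # Stop once a window has reached the end of the input.
        if start + chunk_size >= len(words):
            break
    return chunks


text = "one two three four five six seven eight nine ten"
chunks = chunk_with_overlap(text.split(), chunk_size=4, overlap=2)
for chunk in chunks:
    print(" ".join(chunk))
```

A larger `overlap` repeats more context across neighbouring chunks at the cost of storing and embedding more vectors, which is the same noise-versus-context trade-off described above.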

- One limitation is that this strategy assumes that all of the information you must retrieve can be found in a single document. If the required context is split across multiple different documents, you may want to consider leveraging solutions like document hierarchies and knowledge graphs. (View Highlight)

- A document hierarchy is a powerful way of organizing your data to improve information retrieval. You can think of a document hierarchy as a table of contents for your RAG system. It organizes chunks in a structured manner that allows RAG systems to efficiently retrieve and process relevant, related data. Document hierarchies play a crucial role in the effectiveness of RAG by helping the LLM decide which chunks contain the most relevant data to extract. (View Highlight)

- Document hierarchies associate chunks with nodes, and organize nodes in parent-child relationships. Each node contains a summary of the information contained within, making it easier for the RAG system to quickly traverse the data and understand which chunks to extract. (View Highlight)
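
One way to picture the node structure described above is a small tree in which every node carries a summary, and retrieval walks from the root toward the child whose summary best matches the query. This is only a sketch: the `Node` class, the `keyword_overlap` scoring function, and the sample summaries are all invented for illustration, and a real system would more likely score summaries with embeddings or an LLM call.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hierarchy node: a summary plus either child nodes or leaf chunks."""
    summary: str
    children: list["Node"] = field(default_factory=list)
    chunks: list[str] = field(default_factory=list)


def keyword_overlap(query: str, summary: str) -> int:
    """Toy relevance score: count of lowercase words shared by query and summary."""
    return len(set(query.lower().split()) & set(summary.lower().split()))


def find_chunks(node: Node, query: str) -> list[str]:
    """Descend from `node`, following the best-matching child at each level."""
    while node.children:
        node = max(node.children, key=lambda child: keyword_overlap(query, child.summary))
    return node.chunks


# Illustrative hierarchy; the summaries and chunk texts are made up.
root = Node("company handbook", children=[
    Node("hr policies holidays healthcare leave", children=[
        Node("public holidays and leave", chunks=["Offices close on national public holidays."]),
        Node("healthcare benefits", chunks=["Healthcare coverage starts on day one."]),
    ]),
    Node("engineering onboarding laptops access", chunks=["Request a laptop from IT."]),
])

print(find_chunks(root, "which public holidays apply"))
```

Because only one branch is followed per level, the search touches a handful of summaries rather than every chunk, which is where the speed and repeatability benefits would come from in this simplified picture.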

- Think of a document hierarchy as a table of contents or a file directory. Although the LLM can extract relevant chunks of text from a vector database, you can improve the speed and reliability of retrieval by using a document hierarchy as a pre-processing step to locate the most relevant chunks of text. This strategy improves the reliability, speed, and repeatability of retrieval, and can help reduce hallucinations caused by chunk extraction issues. Constructing a document hierarchy may require domain-specific or problem-specific expertise to ensure the summaries are fully relevant to the task at hand. (View Highlight)

- Let’s take a use case in the HR space. Say a company has 10 offices, and each office has its own country-specific HR policy but uses the same template to document it. As a result, each office’s HR policy document has roughly the same format, but each section details country-specific policies for public holidays, healthcare, etc. (View Highlight)

- In a vector database, every “public holidays” paragraph chunk would look very similar. In this case, a vector query could retrieve a lot of the same, unhelpful data, which can lead to hallucinations. By using a document hierarchy, a RAG system can more reliably answer a question about public holidays for the Chicago office by first searching for documents that are relevant to the Chicago office. (View Highlight)
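
The Chicago example can be sketched as a two-step lookup: narrow the candidates to the right office first, then rank only those chunks against the question. Everything here is hypothetical, including the `Chunk` fields, the sample policy text, and the word-overlap score that stands in for a vector similarity search.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    office: str   # hypothetical metadata tag attached at ingestion time
    section: str
    text: str


def word_overlap(a: str, b: str) -> int:
    """Toy stand-in for vector similarity: shared lowercase words."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def retrieve(chunks: list[Chunk], query: str, office: str, top_k: int = 1) -> list[Chunk]:
    """Filter by office first, then rank the remaining chunks by similarity."""
    candidates = [c for c in chunks if c.office == office]
    ranked = sorted(candidates, key=lambda c: word_overlap(query, c.text), reverse=True)
    return ranked[:top_k]


# Made-up sample data: the two "public holidays" chunks are nearly identical.
CHUNKS = [
    Chunk("Chicago", "public holidays", "Public holidays observed by this office include Thanksgiving."),
    Chunk("London", "public holidays", "Public holidays observed by this office include Boxing Day."),
    Chunk("Chicago", "healthcare", "Healthcare plans for this office are described here."),
]

result = retrieve(CHUNKS, "which public holidays are observed", office="Chicago")
print(result[0].text)
```

Without the office filter, the Chicago and London holiday chunks would score almost the same, which is the failure mode the highlight describes.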

- It should become increasingly clear that most of the work that goes into building a RAG system is making sense of unstructured data and adding contextual guardrails that allow the LLM to extract information more deterministically. I think of this as akin to the instructions one gives an intern, when they start on the job, on how to reason through a corpus of data. Like an intern, an LLM can understand individual words in documents and how they might be similar to the question being asked, but it is not aware of the first principles needed to piece together a contextualized answer. (View Highlight)

- Knowledge graphs are a useful data framework for enforcing consistency in document hierarchies. A knowledge graph is a deterministic mapping of relationships between concepts and entities. Unlike a similarity search in a vector database, a knowledge graph can consistently and accurately retrieve related rules and concepts, and dramatically reduce hallucinations. The benefit of using knowledge graphs to map document hierarchies is that you can map information retrieval workflows into instructions that the LLM can follow (e.g. to answer question X, I know I need to pull information from document A and then compare it against document B). (View Highlight)

- Knowledge graphs map relationships using natural language, which means that even non-technical users can build and modify rules and relationships to control their enterprise RAG systems. For example, a rule can look like: ‘When answering a question about leave policies, first refer to the correct office’s HR policy document, and then within the document, check the section on holidays.’ (View Highlight)
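
To make the leave-policy rule concrete, the sketch below stores it as labelled edges and follows them in order to produce a retrieval plan. The edge labels, the node names, and the `retrieval_plan` helper are illustrative assumptions, not the API of any graph database, and the sketch assumes each node has at most one outgoing edge.

```python
# Each edge is (subject, relation, object). All names are illustrative.
EDGES = [
    ("leave policy question", "first_consult", "office HR policy document"),
    ("office HR policy document", "then_check_section", "holidays"),
]


def retrieval_plan(start: str, edges: list[tuple[str, str, str]]) -> list[tuple[str, str]]:
    """Follow outgoing edges from `start`; return the ordered (relation, target) steps."""
    # Assumption for this sketch: at most one outgoing edge per subject.
    outgoing = {subject: (relation, target) for subject, relation, target in edges}

    plan = []
    current = start
    seen = set()  # guards against cycles in the rule set
    while current in outgoing and current not in seen:
        seen.add(current)
        relation, target = outgoing[current]
        plan.append((relation, target))
        current = target
    return plan


for relation, target in retrieval_plan("leave policy question", EDGES):
    print(f"{relation}: {target}")
```

The same input always yields the same sequence of steps, which is the deterministic behaviour that distinguishes this lookup from a similarity search.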

- Query augmentation addresses the issue of badly phrased questions, a common issue in RAG that we discuss here. The goal is to ensure that any question missing specific nuances is given the appropriate context to maximize relevancy. (View Highlight)

- Badly phrased questions can often be due to the complicated nature of language. For example, a single word can mean two different things based on the context in which it is used. As Agustinus (Head of AI at CarSales.AU) notes, this is largely a domain-specific issue. Consider this example: is ‘fried chicken’ more similar to ‘chicken soup’ or ‘fried rice’? “The answer varies based on context. If ingredients are the focus, ‘fried chicken’ aligns closest with ‘chicken soup.’ But from a preparation perspective, it’s closer to ‘fried rice.’ Such interpretations are domain-centric.” (View Highlight)
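
The fried chicken example can be reproduced with a toy similarity measure computed over two different feature views. The feature sets below are hand-assigned assumptions made for this sketch, and Jaccard similarity is used only because it is easy to follow. It is not the method the quoted author describes.

```python
# Hand-assigned, illustrative features for each dish.
DISHES = {
    "fried chicken": {"ingredients": {"chicken"}, "preparation": {"fried"}},
    "chicken soup": {"ingredients": {"chicken"}, "preparation": {"simmered"}},
    "fried rice": {"ingredients": {"rice"}, "preparation": {"fried"}},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def closest(dish: str, view: str) -> str:
    """Return the other dish most similar to `dish` under the given feature view."""
    target = DISHES[dish][view]
    others = [name for name in DISHES if name != dish]
    return max(others, key=lambda name: jaccard(target, DISHES[name][view]))


print(closest("fried chicken", "ingredients"))
print(closest("fried chicken", "preparation"))
```

The query is identical in both calls. Only the choice of view changes the answer, which is why the right notion of similarity depends on the domain.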

- What if you want to contextualize an LLM with company or domain-specific words? An easy example of this is company acronyms (e.g. ARP means Accounting Reconciliation Process). Consider a more complicated example from one of our clients, a travel agency, which needed to distinguish between the phrases ‘near the beach’ and ‘beachfront’. To most LLMs, these terms are fairly indistinguishable. In the context of travel, however, a beachfront house and a house near the beach are very different things. Our solution was to map ‘near the beach’ properties to one segment of properties and ‘beachfront’ properties to another, by pre-processing the query and adding company-specific context that refers to the appropriate segment. (View Highlight)
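
A query pre-processing step along these lines might look like the sketch below, which expands known acronyms and appends a note mapping domain phrases to property segments. The segment identifiers, the `augment_query` function, and the bracketed context format are hypothetical. The source does not describe how the client's mapping was actually implemented.

```python
import re

# Company glossary; the ARP entry comes from the example above.
ACRONYMS = {"ARP": "Accounting Reconciliation Process"}

# Hypothetical segment identifiers for the travel example.
PHRASE_SEGMENTS = {
    "beachfront": "segment:beachfront",
    "near the beach": "segment:near_beach",
}


def augment_query(query: str) -> str:
    """Expand acronyms in place and append segment context for known phrases."""
    expanded = query
    for acronym, meaning in ACRONYMS.items():
        # \b keeps the match to whole words, so "HARP" is left alone.
        pattern = rf"\b{re.escape(acronym)}\b"
        expanded = re.sub(pattern, f"{acronym} ({meaning})", expanded)

    lowered = query.lower()
    notes = [
        f"'{phrase}' refers to {segment}"
        for phrase, segment in PHRASE_SEGMENTS.items()
        if phrase in lowered
    ]
    if notes:
        expanded += " [context: " + "; ".join(notes) + "]"
    return expanded


print(augment_query("What is the ARP deadline?"))
print(augment_query("Find a beachfront house"))
```

Keeping the glossary and segment map as plain data means domain experts could update them without touching the retrieval code.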
