Highlights

- ControlNet provides a minimal interface that lets users customize the generation process to a great extent. With ControlNet, users can easily condition the generation on different spatial contexts such as a depth map, a segmentation map, a scribble, keypoints, and so on!

- ControlNet was introduced in Adding Conditional Control to Text-to-Image Diffusion Models by Lvmin Zhang and Maneesh Agrawala. The paper presents a framework that supports various spatial contexts as additional conditioning for diffusion models such as Stable Diffusion.

- Training ControlNet consists of the following steps:

  - Clone the pre-trained parameters of a diffusion model, such as Stable Diffusion’s latent UNet (the “trainable copy”), while also keeping the original pre-trained parameters separately (the “locked copy”). The locked copy preserves the vast knowledge learned from a large dataset, whereas the trainable copy learns the task-specific aspects.

  - Connect the trainable and locked copies via “zero convolution” layers (see here for more information), which are optimized as part of the ControlNet framework. This is a training trick that preserves the semantics already learned by the frozen model while the new conditions are trained.
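The effect of the zero convolutions can be illustrated with a small NumPy toy. This is a sketch under stated assumptions, not the Diffusers or paper implementation: it assumes a zero convolution is a 1×1 convolution whose weight and bias start at zero, and the names `ZeroConv`, `toy_block`, and `controlled_block` are made up for illustration. The point it shows is that, at initialization, adding the trainable branch leaves the locked model's output unchanged.

```python
import numpy as np


class ZeroConv:
    """1x1 convolution whose weight and bias are initialized to zero (assumption)."""

    def __init__(self, channels: int):
        self.weight = np.zeros((channels, channels))
        self.bias = np.zeros(channels)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (channels, height, width); a 1x1 conv only mixes channels.
        return np.einsum("oc,chw->ohw", self.weight, x) + self.bias[:, None, None]


def toy_block(x: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for one pre-trained UNet block."""
    return np.tanh(2.0 * x + 1.0)


def controlled_block(x, condition, zero_in, zero_out):
    """Locked output plus the trainable branch, joined through zero convolutions."""
    locked_out = toy_block(x)  # locked copy: never updated
    # Trainable copy: in this toy it reuses toy_block, i.e. it starts as an exact clone.
    trainable_out = toy_block(x + zero_in(condition))
    return locked_out + zero_out(trainable_out)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8, 8))
condition = rng.normal(size=(4, 8, 8))
zero_in, zero_out = ZeroConv(4), ZeroConv(4)

# With all-zero weights, the control branch contributes nothing yet.
unchanged = np.allclose(controlled_block(x, condition, zero_in, zero_out), toy_block(x))
print(unchanged)
```

Once training moves the zero-convolution weights away from zero, the conditioning starts to influence the output, while the locked block itself is never modified.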

Pictorially, training a ControlNet looks like so:

The diagram is taken from here.


- Every new type of conditioning requires training a new copy of ControlNet weights. The paper proposes 8 different conditioning models, all of which are supported in Diffusers!

- For inference, both the pre-trained diffusion model’s weights and the trained ControlNet weights are needed. For example, using Stable Diffusion v1-5 with a ControlNet checkpoint requires roughly 700 million more parameters than using the original Stable Diffusion model alone, which makes ControlNet a bit more memory-expensive for inference.

- Because the pre-trained diffusion model is locked during training, one only needs to swap out the ControlNet parameters when using a different conditioning. This makes it fairly simple to deploy multiple ControlNet weights in one application, as we will see below.

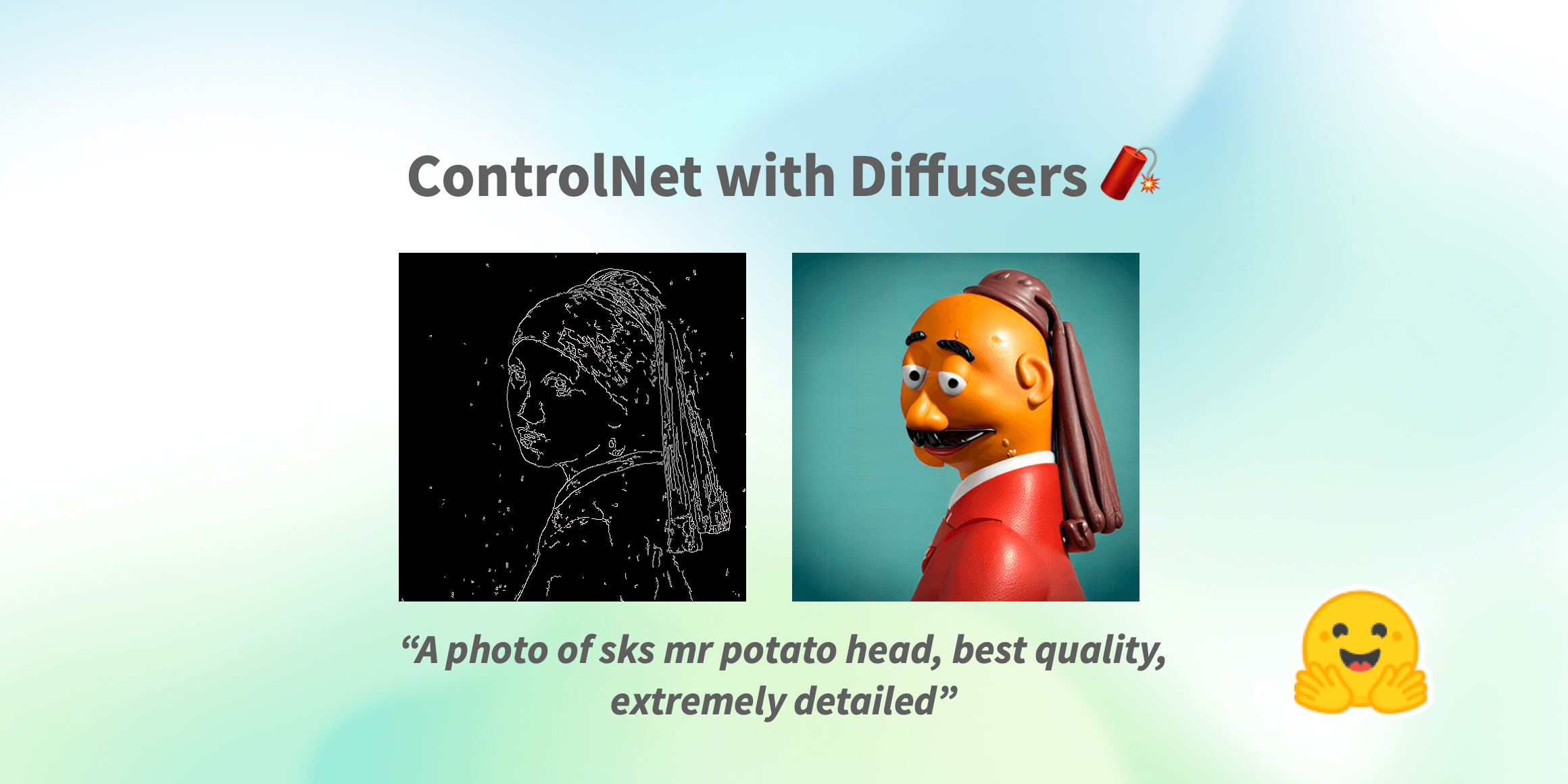

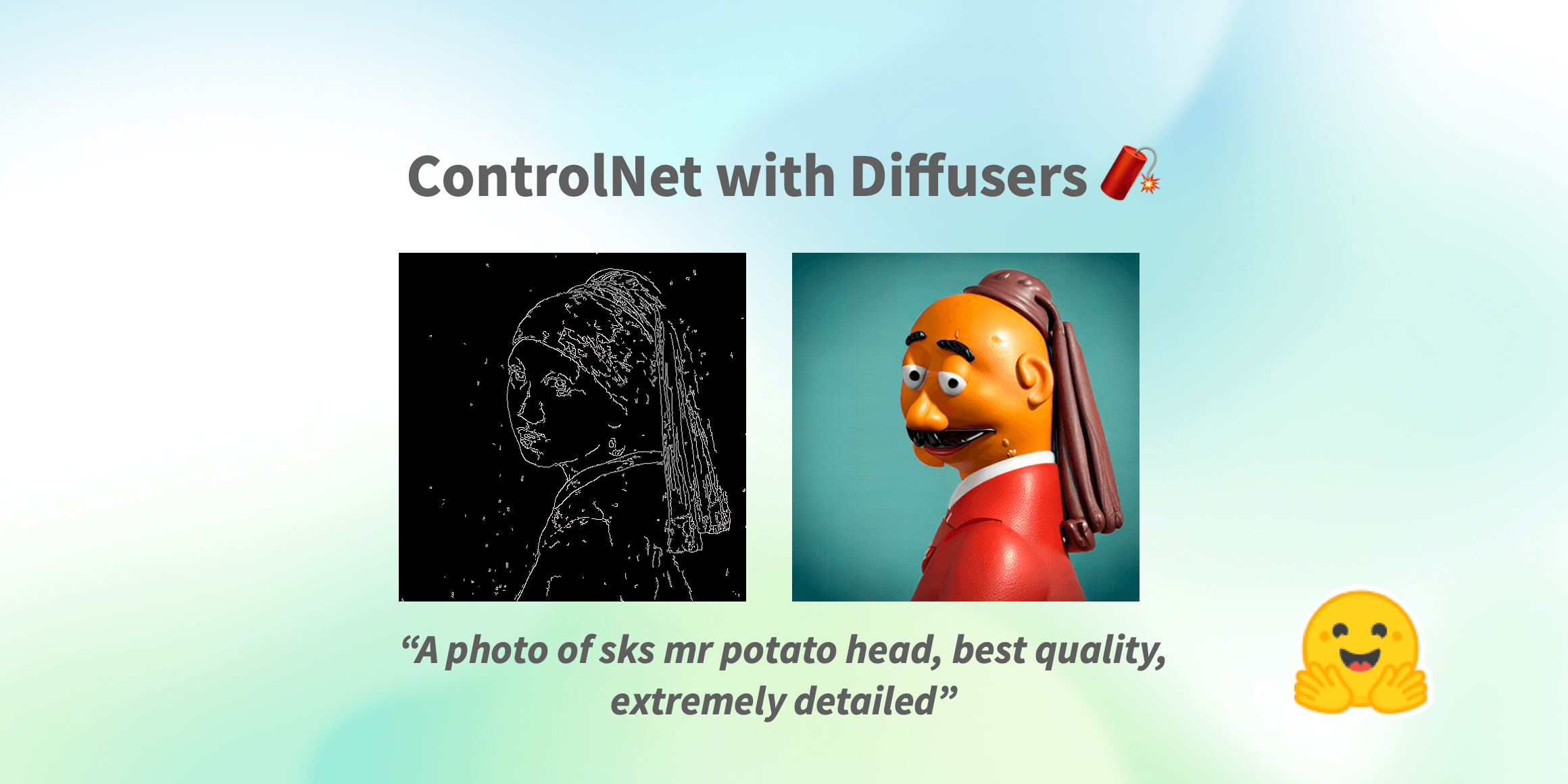

- We will explore different use cases with the `StableDiffusionControlNetPipeline` in this blog post. The first ControlNet model we are going to walk through is the Canny model, one of the most popular models and the source of some of the amazing images you are likely seeing on the internet.
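A minimal sketch of how the Canny setup might look with Diffusers. Several things here are assumptions not stated in these notes: the checkpoint IDs `lllyasviel/sd-controlnet-canny` and `runwayml/stable-diffusion-v1-5`, the Canny thresholds 100 and 200, half-precision weights, and the helper names themselves, which are hypothetical. The heavy libraries (`cv2`, `PIL`, `torch`, `diffusers`) are imported inside the functions so the helpers can be defined without them installed.

```python
import numpy as np


def edges_to_control_image(edges: np.ndarray) -> np.ndarray:
    """Stack a single-channel edge map (H, W) into three channels (H, W, 3)."""
    return np.concatenate([edges[:, :, None]] * 3, axis=2)


def canny_control_image(image, low_threshold=100, high_threshold=200):
    """Turn a PIL image into a Canny edge image (thresholds are assumed values)."""
    import cv2
    from PIL import Image

    edges = cv2.Canny(np.array(image), low_threshold, high_threshold)
    return Image.fromarray(edges_to_control_image(edges))


def load_controlnet_pipeline(
    controlnet_id="lllyasviel/sd-controlnet-canny",  # assumed checkpoint ID
    base_id="runwayml/stable-diffusion-v1-5",        # assumed checkpoint ID
):
    """Load the base model together with one ControlNet checkpoint."""
    import torch
    from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

    controlnet = ControlNetModel.from_pretrained(controlnet_id, torch_dtype=torch.float16)
    return StableDiffusionControlNetPipeline.from_pretrained(
        base_id, controlnet=controlnet, torch_dtype=torch.float16
    )
```

Passing a different `controlnet_id` while keeping `base_id` fixed is how a different conditioning would be selected in this sketch, since only the ControlNet weights change.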

- Instead of using Stable Diffusion’s default PNDMScheduler, we use one of the currently fastest diffusion model schedulers, UniPCMultistepScheduler. Choosing an improved scheduler can drastically reduce inference time: in our case we are able to reduce the number of inference steps from 50 to 20 while keeping more or less the same image generation quality. More information regarding schedulers can be found here.
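Swapping the scheduler could be sketched as follows. The helper name `use_unipc_scheduler` and its `scheduler_cls` parameter are hypothetical conveniences (the parameter exists only so the function can be exercised without Diffusers installed); the underlying pattern of rebuilding a scheduler from the current scheduler's config via `from_config` is the usual Diffusers idiom.

```python
def use_unipc_scheduler(pipe, scheduler_cls=None):
    """Replace the pipeline's scheduler, reusing the existing scheduler config."""
    if scheduler_cls is None:
        # Imported lazily so this module loads even without diffusers installed.
        from diffusers import UniPCMultistepScheduler

        scheduler_cls = UniPCMultistepScheduler
    # Build the new scheduler from the old one's config so settings carry over.
    pipe.scheduler = scheduler_cls.from_config(pipe.scheduler.config)
    return pipe
```

With the new scheduler in place, the lower step count would then be requested at call time, for example by passing `num_inference_steps=20` to the pipeline.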

- Remember that during inference, diffusion models such as Stable Diffusion require not just one but multiple model components that are run sequentially. In the case of Stable Diffusion with ControlNet, we first use the CLIP text encoder, then the diffusion model UNet and ControlNet, then the VAE decoder, and finally run a safety checker. Most components are only run once during the diffusion process and thus do not need to occupy GPU memory all the time. By enabling smart model offloading, we make sure that each component is loaded onto the GPU only when it is needed, which significantly reduces memory consumption without significantly slowing down inference.
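In Diffusers, this kind of offloading is typically switched on with `enable_model_cpu_offload()`, which relies on the `accelerate` package. The wrapper below is a hedged sketch: the function name `place_pipeline` and the fallback of moving the whole pipeline to one device are assumptions for illustration, not something these notes prescribe.

```python
def place_pipeline(pipe, device="cuda"):
    """Prefer model offloading; otherwise move the whole pipeline to `device`."""
    if hasattr(pipe, "enable_model_cpu_offload"):
        # Each component is moved to the GPU only while it runs, so the
        # pipeline should not additionally be moved with pipe.to(device).
        pipe.enable_model_cpu_offload()
        return "offload"
    # Fallback (assumption): keep every component resident on the device.
    pipe.to(device)
    return device
```

The return value only reports which placement strategy was used, which can be handy for logging.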

- Finally, we want to take full advantage of the amazing FlashAttention/xformers attention layer acceleration, so let’s enable this! If this command does not work for you, you might not have xformers correctly installed. In this case, you can just skip the following line of code.
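One way to make this step optional is to guard the call. The sketch below assumes the pipeline exposes `enable_xformers_memory_efficient_attention()`, as Diffusers pipelines do; the wrapper name `try_enable_xformers` and the broad `except` are illustrative choices, not part of the original walkthrough.

```python
def try_enable_xformers(pipe) -> bool:
    """Enable xformers attention if possible; return whether it succeeded."""
    try:
        pipe.enable_xformers_memory_efficient_attention()
    except Exception as err:  # e.g. xformers missing or incompatible
        print(f"xformers not enabled, continuing without it: {err}")
        return False
    return True
```

If the function returns `False`, generation still works, just without the attention speed-up.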
