Why Chatbots Are Not the Future

Highlights

- I had no choice but to start into an unfiltered, no-holds-barred rant about chatbot interfaces.

- When I go up the mountain to ask the ChatGPT oracle a question, I am met with a blank face. What does this oracle know? How should I ask my question? And when it responds, it is endlessly confident.

- Good tools make it clear how they should be used. And more importantly, how they should not be used.

- Compare that to looking at a typical chat interface. The only clue we receive is that we should type characters into the textbox. The interface looks the same as a Google search box, a login form, and a credit card field.

  Of course, users can learn over time what prompts work well and which don’t, but the burden to learn what works still lies with every single user. When it could instead be baked into the interface.

- everything you put in a prompt is a piece of context.

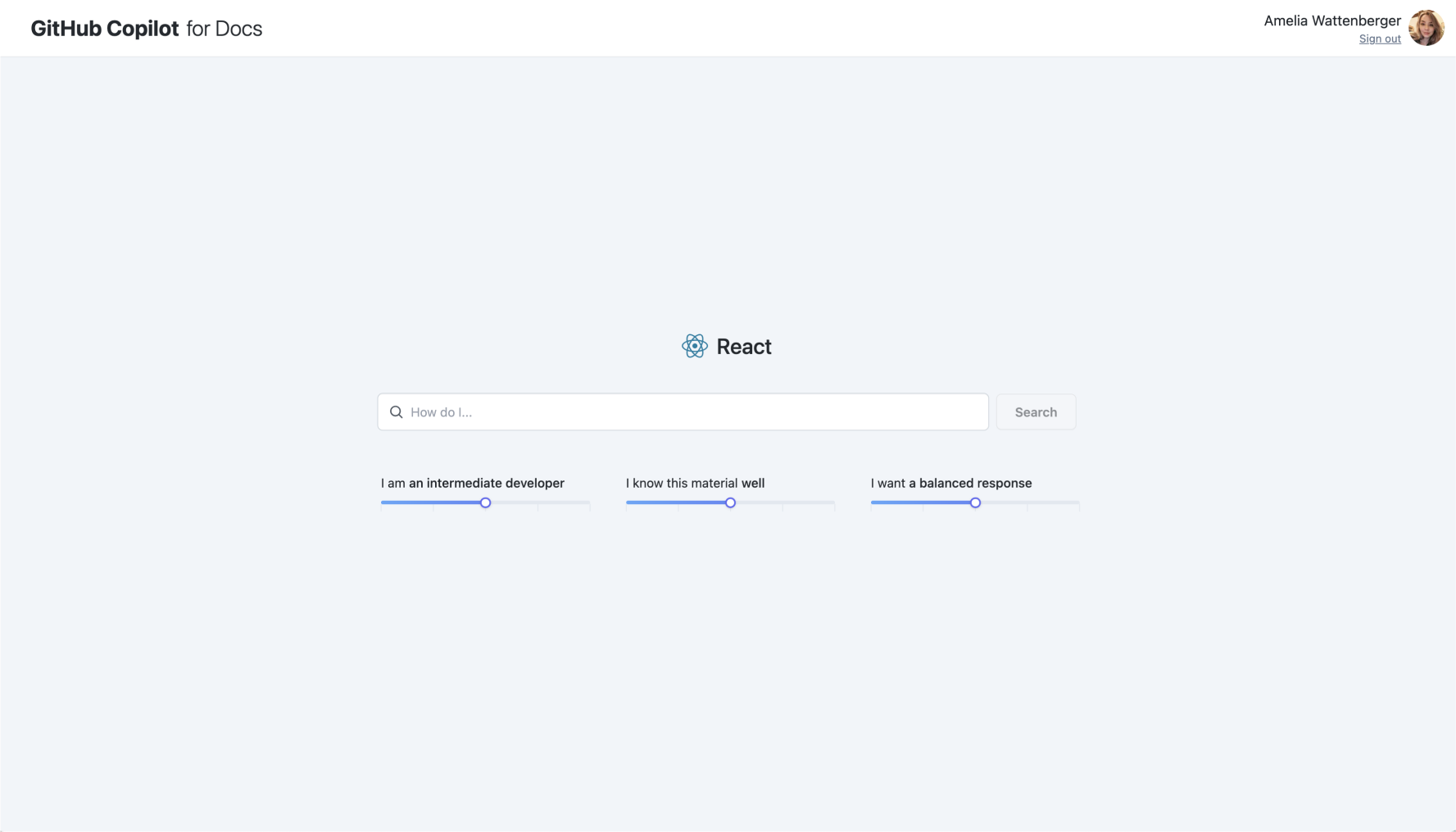

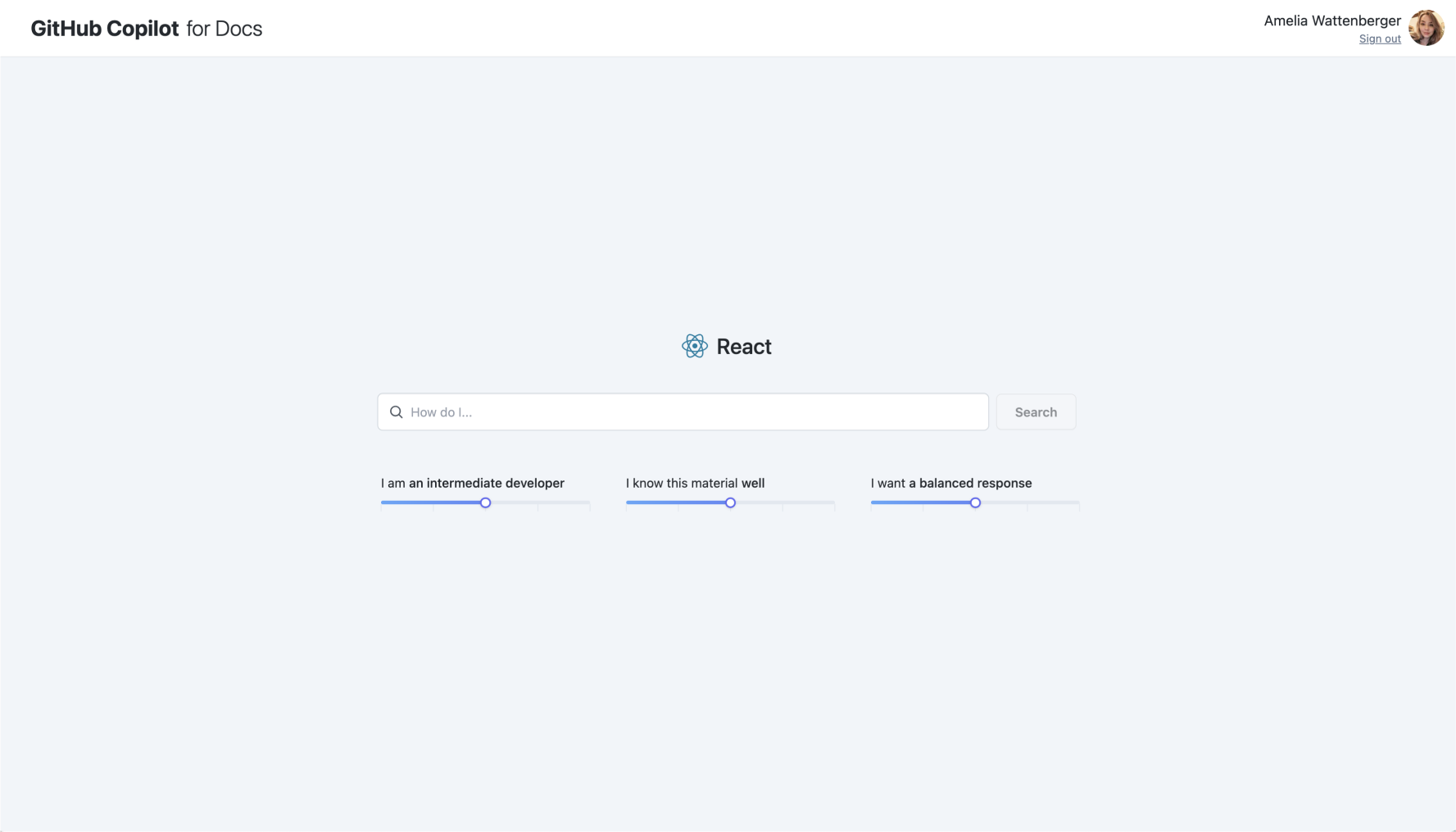

- I think of it in two parts: finding the most relevant information to a user’s question and synthesizing an answer using that information. When we synthesize an answer, we have a chance to tailor the response to the specific question-asker. As a rough first exploration with this idea, we added a few sliders:

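
The two-part idea in the highlight above (find relevant information, then synthesize an answer tailored to the asker) can be sketched roughly as follows. This is only an illustration under stated assumptions: the slider names, the `Sliders` class, and the `retrieve`, `describe`, and `build_prompt` helpers are hypothetical and do not come from the article, and the word-overlap ranking is a stand-in for whatever retrieval a real tool would use. The sketch stops at building the prompt, since "everything you put in a prompt is a piece of context"; no model is called.

```python
from dataclasses import dataclass


@dataclass
class Sliders:
    """Hypothetical slider settings, each in the range 0.0 to 1.0."""
    technical_depth: float = 0.5
    length: float = 0.5


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Part 1: rank documents by naive word overlap with the question."""
    question_words = set(question.lower().split())

    def overlap(doc: str) -> int:
        return len(question_words & set(doc.lower().split()))

    # sorted() is stable, so ties keep their original order.
    return sorted(documents, key=overlap, reverse=True)[:top_k]


def describe(value: float, low: str, high: str) -> str:
    """Turn a slider position into a plain-language instruction."""
    return high if value >= 0.5 else low


def build_prompt(question: str, passages: list[str], sliders: Sliders) -> str:
    """Part 2: assemble the context a model would synthesize an answer from."""
    style = [
        describe(sliders.technical_depth, "Avoid jargon.", "Use precise technical terms."),
        describe(sliders.length, "Answer in one or two sentences.", "Answer in detail."),
    ]
    sources = "\n".join(f"- {p}" for p in passages)
    return f"Question: {question}\nSources:\n{sources}\nStyle: {' '.join(style)}"
```

The design point is that the user moves a slider instead of guessing at prompt wording; the interface, not the user, carries the knowledge of which instructions to send.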

- Natural language is great at rough direction: teleport me to the right neighborhood. But once ChatGPT has responded, how do I get it to take me to the right house?

- two distinct actions: up close, smooshing paint around on the canvas and stepping back to evaluate and plan. These two modes (implementing and evaluating) are present in any craft: programming, writing, you name it.

- Good tools let the user choose when to switch between implementation and evaluation. When I work with a chatbot, I’m forced to frequently switch between the two modes. I ask a question (implement) and then I read a response (evaluate). There is no “flow” state if I’m stopping every few seconds to read a response. The wait for a response is also a negative factor here. As a developer, when I have a lengthy compile loop, I have to wait long enough to lose the thread of what I was doing. The same is true for chatbots.

- There’s an ongoing trend pushing towards continuous consumption of shorter, mind-melting content.

- When a task requires mostly human input, the human is in control. They are the one making the key decisions and it’s clear that they’re ultimately responsible for the outcome.

  But once we offload the majority of the work to a machine, the human is no longer in control.

- There’s a No man’s land where the human is still required to make decisions, but they’re not in control of the outcome. At the far end of the spectrum, users feel like machine operators: they’re just pressing buttons and the machine is doing the work. There isn’t much craft in operating a machine.

- I believe the real game changers are going to have very little to do with plain content generation. Let’s build tools that offer suggestions to help us gain clarity in our thinking, let us sculpt prose like clay by manipulating geometry in the latent space, and chain models under the hood to let us move objects (instead of pixels) in a video.
